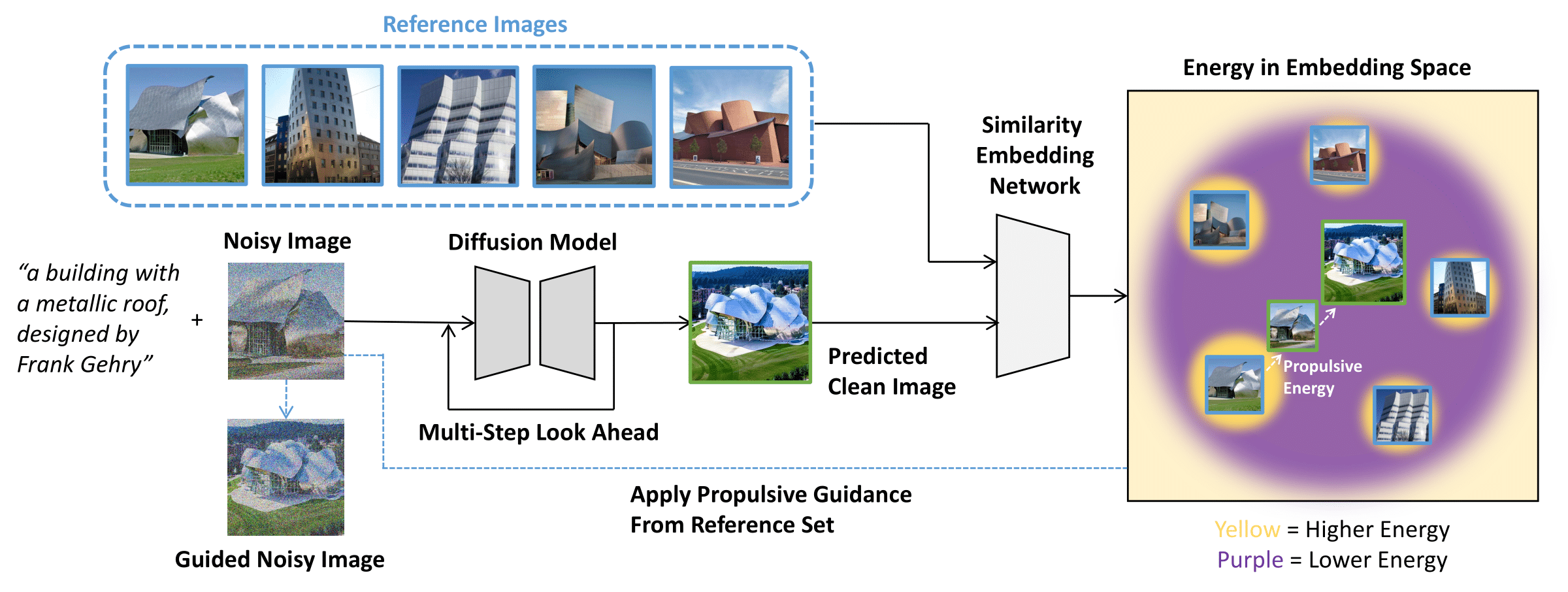

In this paper, we propose ProCreate, a simple and easy-to-implement method that improves the sample diversity and creativity of diffusion-based image generative models and prevents training data reproduction. ProCreate operates on a set of reference images and actively propels the generated image embedding away from the reference embeddings during generation. We also introduce FSCG-8 (Few-Shot Creative Generation 8), a few-shot creative generation dataset spanning eight categories that cover different concepts, styles, and settings, on which ProCreate achieves the highest sample diversity and fidelity. Furthermore, a large-scale evaluation using training text prompts shows that ProCreate is effective at preventing the replication of training data.

At each denoising step of a pre-trained diffusion model, ProCreate applies propulsive guidance that pushes the predicted clean image away from the reference images by maximizing the distance between their embeddings.
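The guidance step can be illustrated with a minimal NumPy sketch. It is not the authors' implementation and rests on several assumptions: the image encoder is replaced by a hypothetical linear map `W`, the propulsive energy is assumed to be the sum of cosine similarities between the embedding of the predicted clean image and each reference embedding, and guidance is applied as one gradient step on that energy before a deterministic DDIM update. The names `propulsive_energy`, `propulsive_grad`, `ddim_step_with_propulsion`, and the step size `scale` are all hypothetical.

```python
import numpy as np


def unit(v: np.ndarray) -> np.ndarray:
    """Scale a vector (or each row of a matrix) to unit L2 norm."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def propulsive_energy(x0_hat: np.ndarray, ref_embs: np.ndarray, W: np.ndarray) -> float:
    """Assumed energy: sum of cosine similarities between the embedding of
    x0_hat (hypothetical linear encoder e = W @ x0_hat) and each reference."""
    u = unit(W @ x0_hat)
    return float((unit(ref_embs) @ u).sum())


def propulsive_grad(x0_hat: np.ndarray, ref_embs: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Analytic gradient of propulsive_energy with respect to x0_hat."""
    e = W @ x0_hat
    n = np.linalg.norm(e)
    u = e / n
    s = unit(ref_embs).sum(axis=0)      # sum of normalized reference embeddings
    grad_e = (s - u * (u @ s)) / n      # chain rule through e -> e / ||e||
    return W.T @ grad_e                 # chain rule through the linear encoder


def ddim_step_with_propulsion(x_t, eps_pred, abar_t, abar_prev, ref_embs, W, scale=0.1):
    """One deterministic DDIM step whose clean-image estimate is first pushed
    downhill on the (assumed) energy, i.e. away from the references."""
    # Predict the clean image from the noisy sample and the noise estimate.
    x0_hat = (x_t - np.sqrt(1.0 - abar_t) * eps_pred) / np.sqrt(abar_t)
    # Propulsive guidance: lower the similarity to the reference embeddings.
    x0_hat = x0_hat - scale * propulsive_grad(x0_hat, ref_embs, W)
    # Deterministic DDIM update (eta = 0) using the guided estimate.
    x_prev = np.sqrt(abar_prev) * x0_hat + np.sqrt(1.0 - abar_prev) * eps_pred
    return x_prev, x0_hat
```

Lowering the summed similarity is one way to increase embedding distance to the references; the energy, encoder, and guidance schedule that ProCreate actually uses may differ from this sketch.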

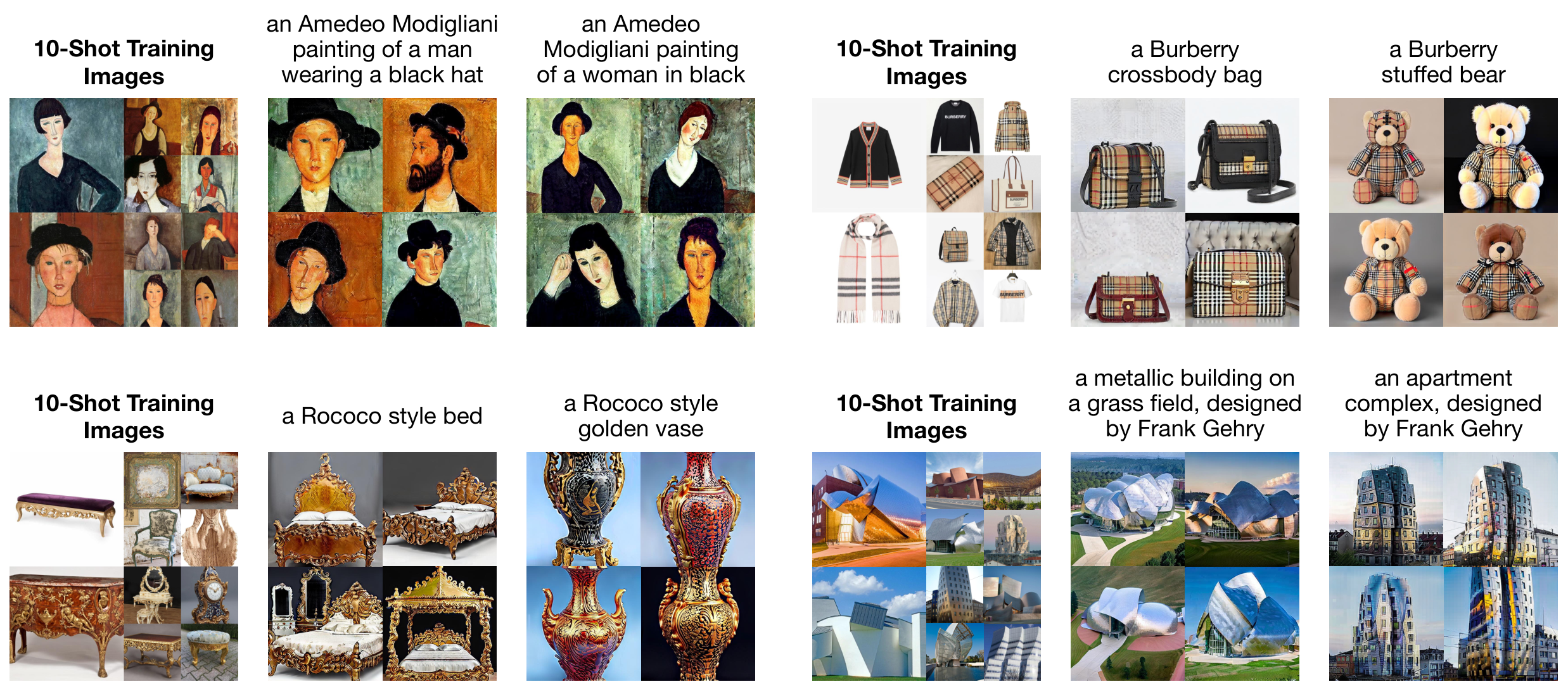

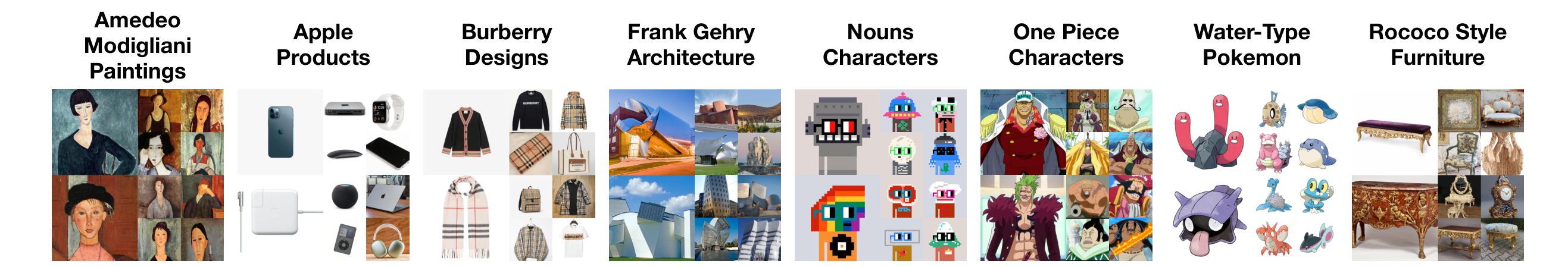

We collect the FSCG-8 dataset, fine-tune a Stable Diffusion checkpoint on the image-caption pairs of each category, and compare samples generated with DDIM, CADS, and ProCreate.

We curate a dataset of 8 categories with 50 image-caption pairs each. The images within each category share properties such as style, texture, and shape.

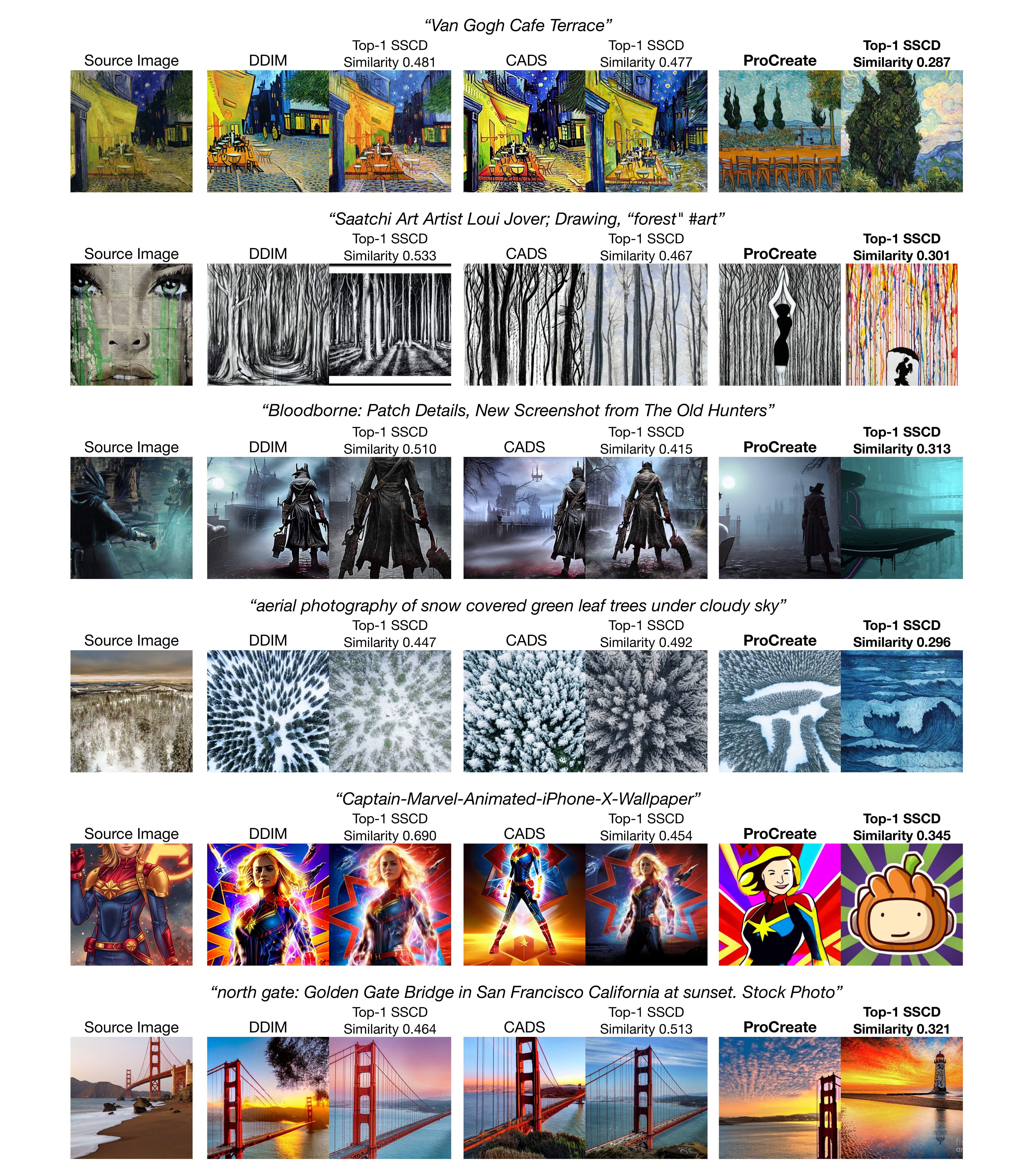

We show a qualitative comparison of DDIM, CADS, and ProCreate for few-shot creative generation on FSCG-8 with standard fine-tuning. For each sampling method, we show two prompts and four generated samples per prompt. We also pair each ProCreate sample with its most similar training image.
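Matching each sample to its most similar training image amounts to a nearest-neighbor lookup in an embedding space. The sketch below assumes cosine similarity over precomputed embeddings; which image encoder produces those embeddings is not specified here, and the function name `most_similar` is hypothetical.

```python
import numpy as np


def most_similar(sample_embs: np.ndarray, train_embs: np.ndarray):
    """For each sample embedding (rows), return the index of the most similar
    training embedding and the corresponding cosine similarity."""
    s = sample_embs / np.linalg.norm(sample_embs, axis=1, keepdims=True)
    t = train_embs / np.linalg.norm(train_embs, axis=1, keepdims=True)
    sims = s @ t.T                          # (num_samples, num_train) cosine matrix
    idx = sims.argmax(axis=1)               # best training match per sample
    return idx, sims[np.arange(len(idx)), idx]


train = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
samples = np.array([[2.0, 0.1], [0.0, 3.0]])
idx, best = most_similar(samples, train)
print(idx)  # [0 1]
```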

Recent studies show that large-scale models like Stable Diffusion are prone to replicating their training data, raising privacy and copyright concerns. We sample from Stable Diffusion with LAION captions and show that using ProCreate to guide samples away from the LAION images significantly reduces replication.
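One common way to quantify replication is to count a generated sample as a replica when its highest similarity to any training image exceeds a threshold. The sketch below follows that idea as an assumption; the cosine-similarity criterion, the threshold `tau`, and the function name `replication_rate` are hypothetical and not taken from the evaluation described above.

```python
import numpy as np


def replication_rate(sample_embs: np.ndarray, train_embs: np.ndarray, tau: float = 0.95) -> float:
    """Fraction of samples whose maximum cosine similarity to any training
    embedding exceeds the (hypothetical) threshold tau."""
    s = sample_embs / np.linalg.norm(sample_embs, axis=1, keepdims=True)
    t = train_embs / np.linalg.norm(train_embs, axis=1, keepdims=True)
    max_sim = (s @ t.T).max(axis=1)         # closest training image per sample
    return float((max_sim > tau).mean())


samples = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
train = np.array([[1.0, 0.01]])
rate = replication_rate(samples, train)     # only the first sample is a near copy
```

Under this kind of metric, guidance that moves samples away from their training images would show up as a lower rate.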

This research has significant broader impacts. It offers content creators and designers tools to enhance AI-assisted design with a lower risk of replicating reference images or private and copyrighted training images. Although the primary implications are beneficial, this technology could potentially facilitate the design of counterfeit products. The ethical use and regulatory oversight of such advances warrant further discussion in future work.

@inproceedings{lu2024procreate,
  title={ProCreate, Don't Reproduce! Propulsive Energy Diffusion for Creative Generation},
  author={Jack Lu and Ryan Teehan and Mengye Ren},
  booktitle={Computer Vision - {ECCV} 2024 - 18th European Conference, Milano, Italy, September 29 - October 27, 2024},
  year={2024},
}